ASRock Z97 Extreme9 - Power loss on high load

Printed From: ASRock.com

Category: Technical Support

Forum Name: Intel Motherboards

Forum Description: Question about ASRock Intel Motherboards

URL: https://forum.asrock.com/forum_posts.asp?TID=1661

Printed Date: 24 Feb 2026 at 1:35pm

Topic: ASRock Z97 Extreme9 - Power loss on high load

Posted By: CosmiChaos

Subject: ASRock Z97 Extreme9 - Power loss on high load

Date Posted: 05 Jan 2016 at 9:15am

Hello, my system featuring the ASRock Z97 Extreme9 is losing power / shutting down unexpectedly under high load.

First of all, here's my system spec:

CPU: Intel Haswell 4790K
MEM: Teamgroup 16GB 2666MHz CL11
GPUs: 2x Gigabyte GeForce 780 Ti GHz
M.2: Samsung XP941
PSU: Enermax Platimax 1350W

Detailed description of the symptoms:

It started a short time after I built the PC from spare parts. At first it was just rarely freezing/stuttering, every 2 or 3 days during gaming. Then I stopped gaming for personal reasons for about half a year and barely touched the PC. After that it was still the same at first, nothing to worry about greatly, but then it started getting worse: more and more frequent stutters/freezes/black screens... sometimes one graphics card just resets (found out in Afterburner) while the PC/OS keeps running. Finally the PC started to shut down unexpectedly during high usage.

Things tried to reproduce:

Solely high CPU load (FPU bench, with OC enabled): not reproducible / stable
Only one graphics card plugged in: not reproducible / stable
SLI disabled, testing each card: same unexpected shutdowns

I tested the GPUs in a different PC with heavy OC; they are both OK individually, but I could not try SLI there because I have no second PSU of that wattage. I have tried various Nvidia drivers. I have tried a different SLI bridge. I've checked all cables, tried different ones, and tried different ways of distributing power from the rails, including Enermax's recommendation to oversplit the dual-link cables over cards 1 and 2... all the same. I've watched temps: no card ever exceeds 75°C, and the system is on very good cooling.

Soooo, actually I think it is highly likely that the high-quality Enermax PSU is failing, which is why I am RMAing it... that's exactly NOT the reason why I bought an Enermax PSU... somehow I cannot believe this. And I have a slight feeling that it does NOT HAVE TO be the PSU... it could just as well be a failure of the motherboard.

I think it could be a problem of the motherboard, which takes the 16 PCIe lanes from the Haswell CPU and puts them onto the PEX 8747 to mirror them to the SLI cards. I switched to UEFI v1.90 lately... but I don't think it's related to the firmware, because right after doing that everything was OK at first. I really think whatever I'm facing is definitely a hardware bug.

Does anybody else have an idea? Right now I'm waiting for my PSU to be checked and, if it is faulty, replaced... but I'm so scared that they cannot find a failure in their lab :(. If the PSU turns out to be OK... the first thing I'll do is send this board back to the reseller!

Replies:

Posted By: parsec

Date Posted: 05 Jan 2016 at 11:38am

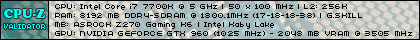

Your PC's hardware listing is too incomplete to diagnose your problem, although I have an idea about what is happening that I will describe below.

What kind of PC case are you using? How many and what size fans are you using in the PC case, and how do you control their speed? What type of CPU cooler are you using? Have you ever watched the CPU temperature while you are gaming?

You have two video cards that vent most of their heat into the PC case. Unless that heat is removed quickly from the PC case, the heated air is used to try to cool the CPU with your unknown CPU cooler. So you could easily have a CPU overheating problem if you are using the stock Intel CPU cooler.

Your Enermax Platimax PSU has a specification of 1350W. Let's look at the output on its multiple rail 12V design:

We can see the PSU has four +12V rails rated at 30 Amps each, and two +12V rails rated at 20 Amps each. The total +12V output capacity is 1350W, which is 112.5 Amps x 12V = 1350 Watts.

The potential problem I see here is the Amp rating of each of the six +12V rails. Four rails at 30 Amps each is 120 Amps total, over the 1350W/112.5Amp rating. Add to the 120 Amps the two rails at 20 Amps each (40 total), for a total of 160 Amps. 160 Amps x 12V = 1920 Watts.

My point here is that the individual power rating of each of the six +12V rails cannot be maintained if all of them are in use at their full capacity. Even if you did not use the two 20 Amp +12V rails, the four 30 Amp rails would be beyond the maximum capacity of the PSU. This does not even include the +5V and +3.3V load on the PSU, which will further reduce the total power available. If you were using 20 Amps from each of the six 12V rails, that is still 1440W/120Amps, again over the 1350W maximum rating of the PSU.

As you said, you already tried to "oversplit", that is, to distribute the power needed for each video card across as many of the +12V rails as possible. Each video card requires two eight pin power connectors, for a total of four. Do you have one eight pin cable connected to each of the four 30Amp +12V outputs? That would be the best way to supply power to your two video cards.

In reality, it is probably not that the PSU cannot supply enough power, but that the power limits on each of the +12V rails may be causing the PSU to shut off power to the video cards. I don't know the true amount of power the video cards are using, but I wanted to explain to you how it is possible for a PSU design like yours to fail IF it is not connected correctly to the video cards, etc., in a PC. If the power usage on one of the +12V rails goes beyond the 20A or 30A rating, it will shut off.

Your motherboard has an extra power connector at the bottom of the board to supply extra 12V power to the PCIe x16 slots. Do you have a cable connected to that connector?

Your board has two eight pin CPU power connectors at the top of the board. Unless your CPU is highly overclocked, you do not need to connect cables to both of those connectors; one eight pin connector/cable will be enough.

A motherboard will turn off the PC if the CPU overheats, or if the PSU shuts off power to one or more components in the PC, like a video card or the CPU.

I'll let others comment about your problem, but I would like you to answer the questions I asked at the beginning of this post.

-------------
http://valid.x86.fr/48rujh
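The rail arithmetic in the post above can be sketched in a few lines of Python. This is only an illustration: the rail ratings and the 1350W combined figure are the ones quoted in the post, while the function names and the per-rail example loads are made up for the sketch and are not measurements from the OP's system.

```python
# Figures quoted in the post: four 30 A rails, two 20 A rails,
# and a combined +12V capacity of 1350 W.
RAIL_LIMITS_A = [30, 30, 30, 30, 20, 20]
COMBINED_12V_W = 1350
VOLTS = 12.0


def rail_budget(rail_limits_a, combined_w, volts=VOLTS):
    """Compare the sum of the per-rail limits with the combined +12V capacity."""
    sum_of_limits_a = sum(rail_limits_a)
    combined_a = combined_w / volts
    return {
        "sum_of_limits_a": sum_of_limits_a,
        "sum_of_limits_w": sum_of_limits_a * volts,
        "combined_a": combined_a,
        # True when the rails cannot all run at their limit simultaneously.
        "oversubscribed": sum_of_limits_a > combined_a,
    }


def tripped_rails(loads_a, rail_limits_a):
    """Return the indexes of rails whose load exceeds that rail's limit."""
    return [
        index
        for index, (load, limit) in enumerate(zip(loads_a, rail_limits_a))
        if load > limit
    ]


budget = rail_budget(RAIL_LIMITS_A, COMBINED_12V_W)
print(budget)

# Hypothetical loads in amps, one per rail, purely for illustration.
example_loads_a = [32, 12, 18, 10, 5, 5]
print(tripped_rails(example_loads_a, RAIL_LIMITS_A))
```

In the hypothetical example the total load is well under 112.5 A, yet the first rail is over its 30 A limit, which is the failure mode described above: a single rail can trip even when the PSU as a whole has capacity to spare.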

Posted By: wardog

Date Posted: 05 Jan 2016 at 5:31pm

Seeing as a card is dropping out, it'd be a safe bet that the answer is no. Yet I've not seen one just up and do what the OP says either. This board is no slouch when it comes to onboard goodies; maybe connect both 8-pins at the top AND the PCIe power at the bottom. Also, knowing it's made with "spare" parts, check and/or change out the bridge between your two graphics cards. ....

Hear, hear. I too would like an idea of what the OP's system consists of.

Posted By: CosmiChaos

Date Posted: 07 Jan 2016 at 1:08pm

So many things.

1. Heat is no problem. I have multiple 120mm and 140mm fans for intake and exhaust airflow. Most fans are controlled via the board, some via the case's fan control.

2. The case's fan control features 2 channels, each up to 15W, each with 2x 120mm fans connected. No problem here.

3. The board's Molex connector WAS plugged in... the manual and ASRock support say that this plug might add additional stability if MORE THAN 3 CARDS (4!!!) are used. I removed it, because PCIe #5 is not in use, but I'm still having the SAME issue.

4. parsec... your explanation of the rail power distribution doesn't help me. As I said, I had each of the 30A rails (4x) connected to one socket of each 780 Ti (power rating max. 350W). I unplugged all peripherals (thanks to the M.2 Ultra) so as not to put load on the rails connected to the primary SLI card, and shared the secondary card's second port with the rail that also feeds the additional 8-pin motherboard power socket. The single-rail ratings on the label in fact only show when the OVER-CURRENT PROTECTION of each rail kicks in. The combined power rating of the 30A rails is ~112A. That's more than sufficient for about 800W max demand. One graphics card may draw up to 350W on 12V (~30A per card), but each card has two connectors; that's why IMHO it is not wise to oversplit one rail over both connectors, and therefore I intentionally used a single rail per connector. Using one rail on both connectors of each card is what the stupid Enermax support suggested as a quick hint/try before RMAing. To make it short: I have a watt meter... and NO, it is impossible that more than 112A at 12V is being drawn by the motherboard and GPUs, because the PC overall is below 1kW.

5. The SLI bridge has of course been tested against a different bridge... no change.

6. I repeat that both of the GPUs do fine when I unplug the other, even with pretty much max OC.

7. "Have you ever watched your CPU temp"... LOL. I installed and configured a 140mm framed window fan to suck heat away from the GPU area (not from the fans, haha, only from the coolers) when the CPU is being used. I ran a stable FPU burn-in at 95°C, Linpack at 72°C, and gaming is usually below 60°C... so... wait... stop asking me things as if you think I'm a retard. BTW: the CPU water cooler is a Corsair Hydro H110i. :p

Oh dear. I read the motherboard's manual. I read the PSU's label. The Molex at the bottom is of no use in my case, because it distributes power to a PCIe slot that I don't even have anything plugged into. I know GPUs and CPUs produce heat. Can you start talking with an IT systems electrician about what the real problem here is, or do you want to keep fooling around? I know exactly that my CPU temp is FINE. WTF. Don't you think somebody who spends thousands of bucks to build his own PC from spare parts knows what he is doing?

What I want to know from ASRock is the following: This board features a PEX, and it features an additional PCIe switch. My CPU, the i7-4790K, has exactly 16 PCIe Gen3 lanes. The PEX allows multicasting those 16 to both cards. So far so good. I use a Samsung XP941 M.2 Ultra that is connected via PCIe x4 Gen3. How come GPU-Z tells me that both cards can still handle PCIe x16 Gen3, while the XP941 is accessible at PCIe x4 Gen3 at the same time? What happens when the game is actively accessing the game data on the M.2 Ultra and at that moment the game/driver/GPU subsystem exceeds x8 (possibly x12) and really has a demand for x16 PCIe Gen3? Could this magic cause a failure and be the reason for the power loss?
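The power arithmetic from point 4 above can be sketched in Python as follows. The 350W per card, 30A per rail, 112.5A combined and "below 1kW at the wall" figures come from the thread; the even split across a card's two connectors is an assumption made for the sketch, and power drawn through the PCIe slot itself is ignored. It covers steady-state figures only, not short load spikes.

```python
VOLTS = 12.0
RAIL_LIMIT_A = 30.0       # per-rail limit quoted in the thread
CARD_MAX_W = 350.0        # per-card maximum quoted in the post
WALL_READING_W = 1000.0   # "below 1 kW" at the wall, per the post
COMBINED_12V_A = 112.5    # 1350 W / 12 V, from the earlier reply


def amps(watts, volts=VOLTS):
    """Convert a power draw in watts to current in amps at the given voltage."""
    return watts / volts


# Current one card would draw at its quoted maximum.
card_a = amps(CARD_MAX_W)

# Assumption: the load splits evenly across the card's two connectors.
per_connector_a = card_a / 2

# Would one rail feeding both connectors of a card stay under its limit?
one_rail_per_card_ok = card_a <= RAIL_LIMIT_A

# The wall reading includes PSU losses, so the DC side cannot draw more than
# this; it is an upper bound on the total 12V current.
dc_upper_bound_a = amps(WALL_READING_W)

print(f"per card: {card_a:.2f} A, per connector: {per_connector_a:.2f} A")
print(f"one rail per card within limit: {one_rail_per_card_ok}")
print(f"12V upper bound from wall reading: {dc_upper_bound_a:.1f} A "
      f"(combined limit {COMBINED_12V_A} A)")
```

With these numbers a single rail per connector carries roughly half of a 30A limit, while one rail feeding a whole card would sit just under it, which is the margin the post is arguing about.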

Posted By: wardog

Date Posted: 07 Jan 2016 at 3:08pm

Well, I for one will refer you to the ASRock Tech Support link below, please. Like you, we are users here ourselves. But being spoken to in that tone is something I will not be party to. We here haven't a clue what one's knowledge and/or skill level is when they first post. Nor do we usually know what has been tried on their end, which usually results in 3-4 days of back and forth here before we get a final picture of what they have tried.

http://event.asrock.com/tsd.asp

Unsubscribed. Good luck with this. I hope you get it solved with ASRock Tech Support.

Posted By: CosmiChaos

Date Posted: 07 Jan 2016 at 11:58pm

Seeing a moron, one who makes fun by assuming other people are idiots, dropping out of the topic, it'd be a safe bet I don't fracking care!

So why don't you ask, instead of speculating that any user must be an idiot because of what he did not say? You were just wrong with everything you said... all connectors were in, and the SLI bridge had of course been tested. What might be the next smart thing for you to ask me, because I didn't mention it yet: maybe whether the drivers are at fault... damn, I tried different drivers. And no, I won't contact ASRock... I will contact my reseller and send in this board when my PSU comes back with no fault found. Because then there is nothing left to be faulty other than the ASRock board. Enermax just informed me it will take 10-14 days from now. Stay tuned.

Posted By: CosmiChaos

Date Posted: 09 Jan 2016 at 12:20am

OK, so nobody on this planet can tell me what magic the PCIe switch is doing to hook up the PEX with the SLI cards at 16 lanes while at the same time hooking up the M.2 Ultra with OS and game data at 4 lanes. That's 20 in sum, but the CPU only features 16. The board reports to Nvidia that both cards can make use of 16 lanes. Seriously, Nvidia doesn't have an x12 mode, so they must virtually address 16 lanes or 8 lanes. So I think when the PCIe bus for my powerful 780 Ti GHz dual SLI tries to burst at higher speeds than x12 while the OS/game bursts reading data at higher speeds than x4... the whole system instantly kind of "segfaults". Is there any way to force the SLI mode down to x8/x8 instead of x16/x16? The UEFI doesn't feature any options regarding that :(
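To put rough numbers on the lane widths discussed above, here is a small Python sketch of PCIe Gen3 link bandwidth. The 8 GT/s per-lane rate and 128b/130b encoding are standard PCIe 3.0 figures; how this board actually routes lanes between the CPU, the PEX switch and the M.2 slot is not stated in the thread, so the x16 uplink used below is an assumption for illustration only.

```python
GEN3_GT_PER_S = 8.0     # PCIe 3.0 raw signalling rate per lane, in GT/s
ENCODING = 128 / 130    # PCIe 3.0 uses 128b/130b line encoding


def link_gb_per_s(lanes):
    """Approximate one-direction bandwidth of a Gen3 link in GB/s."""
    return lanes * GEN3_GT_PER_S * ENCODING / 8


for lanes in (4, 8, 16):
    print(f"x{lanes}: {link_gb_per_s(lanes):.2f} GB/s")

# Two cards that each report an x16 link on the switch's downstream side.
downstream = 2 * link_gb_per_s(16)

# Assumption: the switch's uplink to the CPU is x16 (not confirmed in the thread).
upstream = link_gb_per_s(16)

print(f"downstream/upstream ratio: {downstream / upstream:.1f}")
```

A PCIe switch can offer each card a full-width link on its downstream side while both share one uplink, so a tool reading the card's link width would show x16 in that case regardless of how many lanes sit behind the switch. When traffic exceeds the shared uplink, the expected effect is lower throughput; whether that has anything to do with the shutdowns described here is not something this sketch can answer.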

Posted By: Xaltar

Date Posted: 09 Jan 2016 at 12:51am

If you cannot respect your fellow forum members, who are simply trying to understand your problem and provide assistance, please contact Tech Support directly. Thread locked.
