Asrock 970M Pro 3 Troubleshooting?

Printed From: ASRock.com

Category: Technical Support

Forum Name: AMD Motherboards

Forum Description: Question about ASRock AMD motherboards

URL: https://forum.asrock.com/forum_posts.asp?TID=2253

Printed Date: 29 Dec 2025 at 9:11am

Topic: Asrock 970M Pro 3 Troubleshooting?

Posted By: KingOfKatz

Subject: Asrock 970M Pro 3 Troubleshooting?

Date Posted: 17 Mar 2016 at 9:28am

Greetings,

I have a small project where I am upgrading a few of our office HP Pavilions to extend their useful lives. Only the case, multi I/O front panels, and drives are original. The power supplies and graphics cards were upgraded several years ago. Current specs are as follows:

- Asrock 970M Pro 3 motherboard
- AMD FX 8320E CPU
- G.Skill Ripjaws X Series 2 x 4GB PC3 10666 RAM
- RAIJINTEK AIDOS heatsink with 4 6mm heatpipes and 90mm fan
- GELID 92mm Silent 9 case fan
- SATA RAID 1
- MSI Nvidia GTX graphics card
- Thermaltake 600W TR2 power supply
- Windows 7 Pro

I brought the first upgraded Pavilion up last Friday afternoon, March 11th. The system runs very cool (~24C CPU, ~23C motherboard), much quieter than the original motherboard and CPU fan combo, and about as fast as some of the Asus Intel i5 3570s we have. It is close enough that you would need to benchmark to see a noticeable difference. These Pavilions will be running a lot of apps, and I am a little more than halfway done building the disk image.

Over the last couple of nights, I was pretty sure that I had shut down the PC from the Windows menu. I walked to the other side of the room to grab some more coffee, came back to my work area, and the PC was back at the Windows login screen. I did a shutdown again and watched it, and it did shut down.

This morning when I turned it on, it halted at the UEFI logo menu screen. If I press F2 or Del, the screen goes black for about 2 seconds, then comes back to the menu and is unresponsive to the keyboard after that. I could, however, still get into the RAID controller, and it appeared that I could still do whatever I wanted, although I did not verify everything because I don't particularly want to wipe the drives and lose a couple of days of work just yet. I then performed a CMOS reset, and now it halts at the UEFI logo menu screen and is no longer responsive to the keyboard at all.

Does anybody have any suggestions to determine whether this is a defective motherboard, or to rule that out? Are there any workarounds to get into the UEFI settings or boot beyond the menu?

Thanks in advance

Replies:

Posted By: Xaltar

Date Posted: 17 Mar 2016 at 1:09pm

That sounds like corruption in the UEFI, judging by the symptoms. Try performing a full CMOS clear using [this method](http://forum.asrock.com/forum_posts.asp?TID=630&title=how-to-clear-cmos-via-battery-removal) (an hour should suffice). If you are still getting stuck at POST, try disconnecting your hard disks and powering on. There could be an issue with the RAID setup.

Posted By: parsec

Date Posted: 17 Mar 2016 at 1:18pm

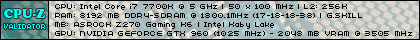

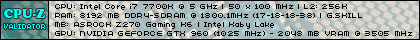

It sounds like the PC is failing to POST for some reason. Clearing the CMOS will force a prompt to enter the UEFI UI upon restart, but that assumes POST can complete. Was the RAID 1 array still functional when you were in the RAID utility? Disconnecting all other hardware to test the startup is one way to debug this situation. Do you have the POST beep speaker connected to the board? If not, one might provide some clues.

-------------

http://valid.x86.fr/48rujh

Posted By: KingOfKatz

Date Posted: 18 Mar 2016 at 6:35am

I followed your outlined method. I let it sit overnight without a battery. It did take two power cycles to finally clear to default once the battery was placed back in.

Posted By: KingOfKatz

Date Posted: 18 Mar 2016 at 7:18am

The RAID 1 array is still marked as functional, but it seems that the current RAID array may be what is causing the issues. The system is currently halting right after the AMD BIOS date is posted and just before the non-graphical UEFI menu. I swapped in an SSD from another working system and it completed the boot process, even though I did not define another RAID array. However, if any of the original RAID array members are connected to any port, the system will halt.

Since this array was working fine from the very first day, any ideas on what might have happened? Is there a way to recover the array? If I undefine the array, would one of the former members be accessible so I can perform a partial data recovery?

A POST beep speaker would be good to have, but these HP a6250 cases do not have any. I pulled apart several decommissioned servers and desktops, and to my surprise none had one except for an old NT4 HP USFF, where it was soldered to the motherboard. I'll drop by Radio Shack after today and see if I can find one to keep in my tool pouch.

Thanks for the help

Posted By: parsec

Date Posted: 18 Mar 2016 at 10:41am

I'm confused now, as there is new information in this post that I don't see in the first post. It seems you moved a RAID 1 array from the original HP Pavilion to the 970M Pro3 board? I highlighted the statement in your most recent post that indicates that is the case. That was not mentioned in your first post.

You also wrote, "... current raid array that may be causing the issues". So we have current and original RAID arrays? It seems you are using the RAID arrays from the HP Pavilions to help build the new RAID arrays? If so, any idea what the original boards in the HP Pavilions are? You may have a simple RAID incompatibility between the old and new boards.

Posted By: KingOfKatz

Date Posted: 18 Mar 2016 at 6:51pm

Thanks for your time, and sorry about the confusing statements. I hope I'm not going to make it more confusing by trying to explain it, but here goes. Sorry about the length.

Drives are tested for SMART, surface, and temperature issues before reuse. The drives used for the RAID array on the 970M Pro3 build were originally from a RAID array created on the same PC but with the old Asus-based HP motherboard, I believe an old custom LE series. Surprisingly, the 970M Pro3 actually recognized the old array when first connected and booted up with no problem. The OS just complained about missing drivers. However, I was creating a clean image with a different OS, so I used the AMD RAID controller on the 970M Pro3 to create and full format a new 970M Pro3 based array.

In my post this is known as both the current and the original RAID array for the project build. It is the array that appears to be causing the system to halt at the UEFI screen after a 4 day runtime without issues, which included multiple user initiated shutdowns and reboots. During the post CMOS reset testing that was done yesterday, this array is called the 'current array' before the additional test drive was added, and the 'original array' when used along with the additional test drive, to distinguish between the two test sets. Not sure how to word it any better. It is the same array, with and without an additional non-member drive.

Recap of tests performed after successful CMOS reset:

Using current/original array member set:

- First test: all drives except DVDR disconnected. System would boot to DVD.
- Second test: reconnected original RAID array, verified functional by RAID utility. System halt before UEFI.
- Third set of tests: disconnected each of the members of the array one at a time and verified array degrade by RAID utility. System halt before UEFI.
- Fourth set of tests: tried various combinations using alternate SATA ports, with the same results as the second and third test sets.

Using original array member set + random additional drive:

- Fifth test: added additional drive to a random SATA port. Original drive array members no longer seen in RAID utility (interesting, didn't expect that). System halt before UEFI.
- Sixth test: disconnected original RAID array member drives one at a time until the system booted to the Windows recovery screen.

I wish it was a simple RAID incompatibility between old and new boards; that would make sense. But this RAID array was created and formatted using the 970M Pro3 RAID utility, then failed in some strange way after 4 days of use, causing the system to halt on boot. I've never seen anything like this happen before. Usually you would see an array member drive going bad, but the desktop would still run, although it would complain until you replaced the bad drive. In the past the AMD controllers always did well recovering from a degrade.

It would be nice to know what happened and whether it could be avoided. Could a corruption in the UEFI lead to this? The next test is to re-create the array and see if the same thing happens, but I would very much like to save some if not all of the work that was done so I don't have to start from scratch.

Posted By: KingOfKatz

Date Posted: 22 Mar 2016 at 8:01am

Bummer, it's dead. Will no longer POST. RMA time I guess.

Just an FYI follow-up: after doing much research, it turns out that corruption in the UEFI leading to a corruption in the RAID definition is not all that uncommon, and there is a best practices recovery method. In most cases, after performing a CMOS clear as outlined in the previous post in this thread, you can perform a simple redefinition of the RAID array through the RAID management utility, as long as the drives are connected to the same SATA ports as they were when the array was originally defined. Make sure you skip the format option though. This method will in most cases even work with RAID 0.

In some cases, however, you may need to use a sector editor or a disk utility like TestDisk, booted from a live USB or CD, to restore access to your RAID array, but it is doable. I did not see any Asrock specific posts, so I am guessing this procedure should be the same, although I unfortunately did not get a chance to test it first hand.
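For anyone trying the sector-level route mentioned above, a read-only look at sector 0 of a former array member can show whether a recognizable partition table is still there before anything is redefined. The following is only a minimal Python sketch and was not tested on the hardware in this thread. It assumes the disk uses a classic MBR partition table and that the RAID 1 member's data starts at sector 0 of the physical drive, neither of which is confirmed here. The function names `parse_mbr` and `read_first_sector` are made up for illustration.

```python
import struct

SECTOR_SIZE = 512          # classic sector size assumed by the MBR layout
PARTITION_TABLE_OFFSET = 446
ENTRY_SIZE = 16


def parse_mbr(sector: bytes):
    """Return the non-empty partition entries found in a 512-byte MBR sector.

    Raises ValueError if the sector is too short or lacks the 0x55AA signature.
    """
    if len(sector) < SECTOR_SIZE:
        raise ValueError("need a full 512-byte sector")
    if sector[510:512] != b"\x55\xaa":
        raise ValueError("no 0x55AA boot signature in sector 0")

    entries = []
    for index in range(4):
        start = PARTITION_TABLE_OFFSET + ENTRY_SIZE * index
        raw = sector[start:start + ENTRY_SIZE]
        # Entry layout: status byte, 3 CHS bytes (skipped), type byte,
        # 3 CHS bytes (skipped), 32-bit LBA start, 32-bit sector count.
        status, ptype, lba_start, sectors = struct.unpack("<B3xB3xII", raw)
        if ptype == 0:
            continue  # type 0 marks an unused slot
        entries.append({
            "index": index,
            "bootable": status == 0x80,
            "type": ptype,
            "lba_start": lba_start,
            "sectors": sectors,
        })
    return entries


def read_first_sector(path: str) -> bytes:
    """Read the first 512 bytes of an image file, opened read-only."""
    with open(path, "rb") as handle:
        return handle.read(SECTOR_SIZE)
```

It is safer to run this against an image file copied from the drive than against the live device, for example `parse_mbr(read_first_sector("member0.img"))`, where `member0.img` is a hypothetical file name. A single entry of type `0xEE` would indicate a GPT disk with a protective MBR, which this sketch does not decode further.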
