H110 pro+ btc gpu problem.

Printed From: ASRock.com

Category: Technical Support

Forum Name: Intel Motherboards

Forum Description: Question about ASRock Intel Motherboards

URL: https://forum.asrock.com/forum_posts.asp?TID=5781

Printed Date: 18 Jan 2026 at 9:59pm

Software Version: Web Wiz Forums 12.04 - http://www.webwizforums.com

Topic: H110 pro+ btc gpu problem.

Posted By: Kinowa

Subject: H110 pro+ btc gpu problem.

Date Posted: 04 Aug 2017 at 12:57pm

Hi, I got a new ASRock H110 Pro BTC+, a 1600 W PSU, and 5x 1080 Ti connected with risers, with 8+6-pin power to each card, plus Windows 10, 8 GB of memory, and a Samsung SSD. When I boot into Windows, my GPUs get error code 43. Is there anything I need to change in the Windows or BIOS settings to get the 5 cards to work together? This is a video of Device Manager after booting into Windows: https://youtu.be/3IsvDnEy46o

Replies:

Posted By: parsec

Date Posted: 04 Aug 2017 at 9:49pm

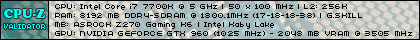

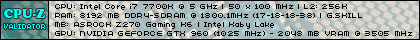

In the UEFI/BIOS Chipset Configuration screen, is the Above 4GB MMIO BIOS assignment option set to Enabled? Also, in the same screen, is the IOAPIC 24-119 Entries option set to Enabled? Did you connect power cables from your PSU to the three extra power connectors on the board?

------------- http://valid.x86.fr/48rujh

Posted By: Kinowa

Date Posted: 05 Aug 2017 at 12:06am

Yes, both of them are set to Enabled. As for power to the motherboard, there is a 24-pin, an 8-pin cable labelled 'CPU', and 2x 4-pin, and they are all connected as well. Are those the ones you're talking about?

Posted By: Kinowa

Date Posted: 05 Aug 2017 at 4:04am

I tried a fresh reinstall, this time on Windows 8.1... Same problem still. I have no clue what this code 43 means. There is no chance I got delivered 5 broken 1080 Tis. As you can see in the YouTube clip, the cards are recognized and seem like they want to load, but in the end all 5 get code 43.

Posted By: parsec

Date Posted: 05 Aug 2017 at 10:10am

A Device Manager code 43 error is very generic; it could mean a broken device, a driver problem, or other problems. So you tried to use all five cards installed at the same time, and you get this error? Did you install a driver for these cards? If you tried to get all five running at the same time, try using only one or two at first. If that works, add one more card, then one more, and so on. It is common when using many cards that you need to install them one at a time. You are also using the risers for the first time, right? So you don't know yet if everything is working right.

------------- http://valid.x86.fr/48rujh

Posted By: Kinowa

Date Posted: 05 Aug 2017 at 10:42am

I did try only one card yesterday; today I am going to try a single card again, but with different cards. Yes, I tried both the old and the new driver. Are drivers needed for these risers? I have another PC I can use to see if it works there; I play games on it, and it also has a GTX 1080 Ti, but a different motherboard.

Posted By: LennartB

Date Posted: 05 Aug 2017 at 8:24pm

I recently purchased an ASRock H110 Pro BTC+ as well, but am using 3x Radeon Vega Frontier GPUs. Kinowa, did Windows automatically recognize your GPUs? I've been living on these forums (and in the instruction manuals) for about 3 days and have tried just about everything, but still can't get it to recognize a single card.

Posted By: Kinowa

Date Posted: 05 Aug 2017 at 9:46pm

It will only recognize it as a video display component or whatnot until I install the NVIDIA driver; then, after booting, it is labelled as NVIDIA GTX 1080 Ti. I'm thinking it's the motherboard that's the problem, and there's no place to get help from either =D I'm going to connect the risers to my gaming PC later and see. If that works, I will change the motherboard.

Posted By: LennartB

Date Posted: 06 Aug 2017 at 5:51am

I'm thinking that it could be a motherboard problem as well. I'm about to create a post with everything that I've done (and failed) to resolve the issue. Unfortunately, it seems like our setup is so new that we're our own tech support, but I'll be sure to write about it here when I get everything working.

Posted By: Darktrop

Date Posted: 08 Aug 2017 at 5:24pm

Error code 43 is a riser problem. Change out the riser, make sure all power is connected correctly, and it WILL work, absolutely. The problem with this MB is running more than 8 GPUs on Windows 10; I think enabling onboard video may solve that. I have 8 GTX 1070s running on a 1000 W power supply, with MSI Afterburner set to 70% power, drawing 900 watts and hashing 239 MH/s of Ethereum on Claymore.
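Darktrop's totals are easier to compare with other rigs in this thread when expressed per card. A minimal sketch, assuming the 900 W is total wall draw and that hashrate and power are spread evenly across the eight cards (the post gives only totals); `per_card` is a hypothetical helper, not part of any mining tool.

```python
# Hypothetical helper: split rig totals into per-card figures.
# Assumes the load is spread evenly across cards; the post only gives totals.

def per_card(total_mhs, total_watts, cards):
    """Return (MH/s per card, watts per card, MH/s per watt for the whole rig)."""
    if cards <= 0:
        raise ValueError("cards must be a positive integer")
    return total_mhs / cards, total_watts / cards, total_mhs / total_watts


if __name__ == "__main__":
    # Totals quoted in the post: 8x GTX 1070, 900 W, 239 MH/s on Claymore.
    mhs, watts, efficiency = per_card(239, 900, 8)
    print(f"{mhs:.1f} MH/s and {watts:.1f} W per card, {efficiency:.3f} MH/s per W")
```

For 239 MH/s, 900 W and 8 cards this works out to about 29.9 MH/s and 112.5 W per card.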

Posted By: Darktrop

Date Posted: 08 Aug 2017 at 5:27pm

I have a Supox 250 MB (same as the Biostar 250) with 12 PCIe slots, running 8 GPUs. With more than 8, Claymore crashes.

Posted By: Kinowa

Date Posted: 09 Aug 2017 at 1:59am

I have 2 spare risers; on Friday or tomorrow I'll swap them in and see if that solves the problem.

Posted By: vortexfoto

Date Posted: 19 Aug 2017 at 6:16pm

Hi guys, I have a problem with the H110 BTC+. I have 4 ASUS P106, 5 GTX 1060 6GB and 1 GTX 1060 3GB. No matter what I do, I can't use more than 8 GPUs; if I install a 9th, the system freezes. But I see on the net that a lot of people have 8+ GPU rigs on Windows. In Linux I have problems using miners (I use NiceHash). It looks like a driver problem. I know AMD has an OpenCL limit of 8 GPUs, but for NVIDIA... Please help.

Posted By: Osiris

Date Posted: 04 Sep 2017 at 1:27pm

My first H81 Pro BTC was DOA. It happens.

Posted By: Midnytjoe

Date Posted: 19 Nov 2017 at 2:00pm

Hi Lennart, I'm having the same issues as you. Were you able to resolve them? Thanks

Posted By: Midnytjoe

Date Posted: 19 Nov 2017 at 2:02pm

Hi Kinowa, were you able to resolve your issues?

Posted By: andresxz

Date Posted: 21 Nov 2017 at 9:56pm

Have you checked the virtual memory? I had a similar problem, increased the virtual memory to 16 GB, and that solved it.

Posted By: crowhead

Date Posted: 27 Nov 2017 at 11:08pm

Hi guys, I have H110 BTC+ rigs running 8x 1070 under Win10. Most of them run OK, but a few keep freezing, randomly but repeatedly: Windows hangs and only a hard restart with the button works. The display freezes as it is. I tried changing everything from risers to GPUs, both all at once and one by one. No pattern showed up. One thing that is always the same: the rig normally draws 900 watts, but when frozen it draws around 333 watts. Has anybody experienced anything similar with this configuration? Clocks are 70% power, 0 to core, +500 to memory.

Posted By: pjdouillard

Date Posted: 15 Dec 2017 at 6:41am

Well, I have the same setup as you but with 8x Zotac 1060 6GB. All are on the same BIOS version, and I too am experiencing random freezes, anywhere from 1 hour to almost 24 hours, under Windows 10. Among the cards, I have some with Hynix, others with Micron, and some with Samsung memory. The Samsung and Micron cards can be OC'd to +750 on the memory clock, but the Hynix cards can sometimes only hold +500, then +400. I know the cards (whatever the memory brand) freeze on other Win 10 systems I have tested, so I don't know what is really going on. Right now I am testing the cards with no OC on anything, just stock settings, and will see where it goes. Will post a follow-up.

Posted By: pjdouillard

Date Posted: 19 Dec 2017 at 10:30pm

Well, here is the follow-up. I was able to run one 40-hour stretch and one 32-hour stretch with no issues, with the 8x 1060 at stock clocks and 70% power. Then it froze like before (I was mining Monero). Then I moved to other currencies, and I can't get more than 2-3 hours of stability without having to power off manually with the power button (still with no OC). It is really becoming a problem, as I can't leave my rig running unattended when I don't know when it will freeze. Temperature is not an issue, as all cards are running under 60°C. I am drawing only 800 W from the wall, so the 1200 W PSU is more than enough. I don't think this board can handle 8 cards without issues. I will remove some cards and retest, but each day I feel more like I should go buy something more robust.

Posted By: Robsth

Date Posted: 22 Dec 2017 at 5:18am

Hi guys, I came here just to let you know I had the same problem: I could not get more than 2 cards working. I turned off VT-d in the BIOS, and now it seems to work. I don't know about the stability yet, but hey, it's something you can try. So turn off the virtualization options in the BIOS and it should work.

------------- yup

Posted By: Robsth

Date Posted: 22 Dec 2017 at 5:22am

Ah yes, I also had to manually install the drivers for each card in Device Manager.

------------- yup

Posted By: pjdouillard

Date Posted: 22 Dec 2017 at 6:08am

Well, after removing 3 cards, my system is rock solid with the 5 remaining cards. Will try to add a sixth one and see how it goes.

Posted By: pjdouillard

Date Posted: 29 Dec 2017 at 1:55pm

Well, I ended up adding 2 more cards instead, and the freezing came back. Everything is at stock clocks; nothing is OC'd. This is really not what I expected from a MB that is supposed to be able to run up to 13 GPUs. Is there something to check in the BIOS to be able to run more than 5 cards? The MB has the 2 extra Molex connectors powered, just as required, so I don't understand what is happening. Should I open a ticket with ASRock?

Posted By: PetrolHead

Date Posted: 29 Dec 2017 at 3:16pm

It's not quite that simple. What PSU are you using?

------------- Ryzen 5 1500X, ASRock AB350M Pro4, 2x8 GB G.Skill Trident Z 3466CL16, Sapphire Pulse RX Vega56 8G HBM2, Corsair RM550x, Samsung 960 EVO SSD (NVMe) 250GB, Samsung 850 EVO SSD 500 GB, Windows 10 64-bit

Posted By: pjdouillard

Date Posted: 10 Jan 2018 at 7:10pm

I found the online guide on the ASRock website that explains how to set up the board, and I followed it:

1) Install the 1st GPU in the x16 PCIe slot. Install the driver. Reboot. Done.
2) Install the 2nd GPU in the topmost x1 slot. Let Windows install the driver for it. Reboot. Done.

I did that up to the 7th card. I have also powered the MB through the SATA power connector (so that's 3 in total with the 2 Molex). The system has been rock solid since then. Yesterday I added an 8th card, and the problems started again. I have disabled the CPU's onboard graphics to stay at 8 display adapters in Windows, but the system still has issues with a display adapter becoming non-responsive, eventually freezing the whole thing after a few hours of running. All GPUs are 1060 6GB (with either Samsung or Micron memory) at +125 core, +700 memory, and 70% power. They draw 80-82 W each. I am starting to think that Windows is the issue in all this.

Posted By: pjdouillard

Date Posted: 10 Jan 2018 at 7:12pm

What is not that simple? I am using a Corsair HX1200i. It has enough connectors for all 3 power inputs on the MB and for 8 GPUs running at 120 W each. Efficiency is around 91%, so I can't ask for better than this.
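pjdouillard's capacity argument can be checked with a little arithmetic using his own figures (about 800 W at the wall, 80-82 W per card, a 1200 W unit at roughly 91% efficiency). This is a minimal sketch, not anything from ASRock or Corsair: `psu_load` is a hypothetical helper, and it assumes the wall reading is AC input, that efficiency is a single constant (real efficiency varies with load), and that the PSU rating refers to DC output.

```python
# Hypothetical helper: rough PSU loading estimate from a wall-meter reading.
# Assumptions: the wall reading is AC input power, efficiency is one constant
# (real PSU efficiency varies with load), and the PSU rating is DC output.

def psu_load(wall_watts, efficiency, rated_dc_watts):
    """Return (estimated DC output W, remaining DC headroom W, fraction of rating)."""
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    dc_output = wall_watts * efficiency
    return dc_output, rated_dc_watts - dc_output, dc_output / rated_dc_watts


if __name__ == "__main__":
    # Figures from the thread: ~800 W at the wall, ~91% efficiency, 1200 W unit.
    dc, headroom, fraction = psu_load(800, 0.91, 1200)
    print(f"DC output ~{dc:.0f} W, headroom ~{headroom:.0f} W, {fraction:.0%} of rating")

    # Cross-check: 8 cards at the reported 80-82 W each, taking the high end.
    gpu_watts = 8 * 82
    print(f"GPUs ~{gpu_watts} W, everything else ~{dc - gpu_watts:.0f} W")
```

With these inputs the estimate is about 728 W of DC output (roughly 61% of the rating), of which about 656 W would be the eight cards. It only checks total capacity, not how the load is distributed across individual cables and connectors.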

Posted By: Slcharger

Date Posted: 24 Jan 2018 at 2:00am

I read this post and want to add some info on how my rig behaved. It may be relevant, or not.

- ASRock H110 Pro
- 8x Sapphire RX580 4G
- 5x ASUS ROG STRIX 1070 Gaming 8G
- 1x Corsair 650W PSU dedicated for MB and 3 GPUs
- 1x Corsair 850W PSU dedicated for MB and 5 GPUs
- 1x Corsair 850W PSU dedicated for MB and 5 GPUs
- Intel 4770K CPU
- 2x4 GB RAM
- Some cheap Chinese risers
- Win 10

BIOS: stock settings, except auto start, with all unnecessary functions disabled.

Started out with 8x RX580, installed all at once. Installed the AMD Blockchain driver (17.30). Found all cards on the third attempt. Ran Claymore dual miner on Nanopool, rock steady at 30 MH/s + DCRI 28 for 3 months.

Installed the 5 ASUS ROG STRIX. Installed the Nvidia driver. Installed and ran NiceHash GPU miner 2.0. Started mining without any trouble (GPU + CPU) and tuned the ASUS GPUs.

Then the trouble started. After 4 hours, Windows froze. Looked into the log file: Error 41, Kernel-Power. Changed the PSU and checked all connections; nothing wrong. Restarted, same problem after 4 hours. Disconnected the PSU for the ASUS GPUs, restarted, and it ran rock steady with only Claymore for 24 hours. Reconnected the ASUS PSU, same problem again. Downloaded the latest driver from Nvidia; it did not help at all. Updated Windows to 1709; same problem still. Had to reinstall the AMD Blockchain driver after the Windows update. Checked power consumption on the PSU for the MB: 450 W, no issue. Wondered if the CPU crashed because the MB was not managing voltage correctly, so I changed all auto voltages to manual:

- VCCIO 1.050 V
- PCH 1.200 V
- DRAM 1.300 V

It has been running flawlessly for one month now: RX 30 MH/s + DCRI 28, ASUS 435 Sol/s.

Posted By: pjdouillard

Date Posted: 24 Jan 2018 at 2:18am

Well, I sold some of the GTX 1060 6GB cards lately, and the number of cards left went down to under 7. No more issues. I am really starting to question whether this board can handle more than 6 cards. You seem to manage with your 8 AMD cards, so there must be something in NVIDIA's drivers that causes those crashes. Anyhow, I am moving away from this MB and will go with ASUS and Gigabyte from now on.

Posted By: Slcharger

Date Posted: 24 Jan 2018 at 2:42am

I know of others who run 13x 1070 on this MB, on ethOS though, not Win 10. I don't believe it's a driver issue; more likely hardware.
